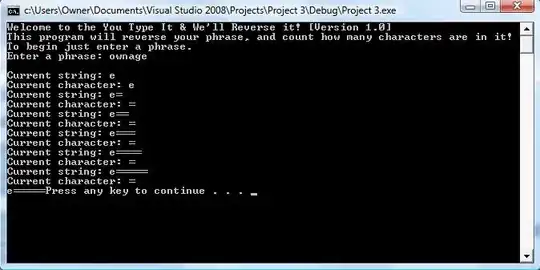

I have the following dataframe:

I want to add 2 new columns, region_1_code and region_2_code, such that both are 9-digit numbers. region_1_code uniquely identifies each region_1 and is constructed as follows. First, append a 0 to the country_code if country_code is less than 100. Then sort the region_1 values alphabetically and assign each one a numeric code starting from 1, followed by as many 0s as needed to reach a length of 6. Finally, concatenate the country_code and the newly computed code to get the region_1_code. For example, in this dataframe the region_1_code for region B is 880100000.
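A minimal pandas sketch of the region_1_code rule, taken literally. It assumes the columns are named `country_code` and `region_1`, and that the alphabetical numbering restarts for each country_code. The sample rows are hypothetical; only region B under country code 88 comes from the question. `country_prefix` and `alphabetical_rank` are illustrative helpers, not library functions.

```python
import pandas as pd

# Hypothetical sample data: only region "B" with country_code 88 is from the question.
df = pd.DataFrame({
    "country_code": [88, 88, 88, 88],
    "region_1": ["C", "B", "B", "C"],
})


def country_prefix(code: int) -> str:
    """Append a 0 to country codes below 100 (88 -> "880"), as the rule describes."""
    s = str(code)
    return s + "0" if code < 100 else s


def alphabetical_rank(s: pd.Series) -> pd.Series:
    """Number the distinct values 1, 2, ... in alphabetical order."""
    order = {name: i for i, name in enumerate(sorted(s.unique()), start=1)}
    return s.map(order)


# Assumption: the region_1 numbering restarts within each country_code.
rank = df.groupby("country_code")["region_1"].transform(alphabetical_rank)

# Rank followed by trailing 0s up to 6 characters (1 -> "100000"), prefixed by the country part.
df["region_1_code"] = (
    df["country_code"].map(country_prefix) + rank.astype(str).str.ljust(6, "0")
).astype(int)

print(df)
```

With these rows, B gets 880100000, matching the example, and C gets 880200000. Padding with trailing zeros means rank 10 also becomes "100000" and collides with rank 1. So, as literally specified, the codes stay unique only for up to 9 region_1 values per country.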

Similarly, region_2_code for region D will be 880100100. The final dataframe should look like this: